Flavio Martinelli

EPFL, Lausanne, Switzerland (CH)

Rare pic of me well dressed

I am a PhD student in the Laboratory of Computational Neuroscience at EPFL, supervised by Wulfram Gerstner and Johanni Brea.

My main research revolves around understanding weight structures in neural networks.

I apply this knowledge to the following topics:

🕵️ Identifiability: can network parameters be reverse engineered? What types of constraints are needed? What are the implications for neural circuits?

🏋️ Trainability: what weight structures are easier or harder to learn? What are the weight structures of sub-optimal solutions (local minima)?

💡 Interpretability: understanding network symmetries to improve manipulation and interpretation of network models.


news

| Dec 09, 2025 | PAPER 📝 Our work on in-memory computing with memristive devices is out in Nature Machine Intelligence! We push the boundaries of what’s computable in-memory, delegating both inference, error computation and error updates on the hardware side to minimize slow and inefficient cross-talk with a generic CPU. Have a look at Actor–critic networks with analogue memristors mimicking reward-based learning |

|---|---|

| Dec 01, 2025 | POSTER 🏞️ In San Diego for NeurIPS ‘25, presenting our Flat Channels to Infinity, Degeneracy in RNNs and Augmentation Techniques for Expand-and-Cluster. |

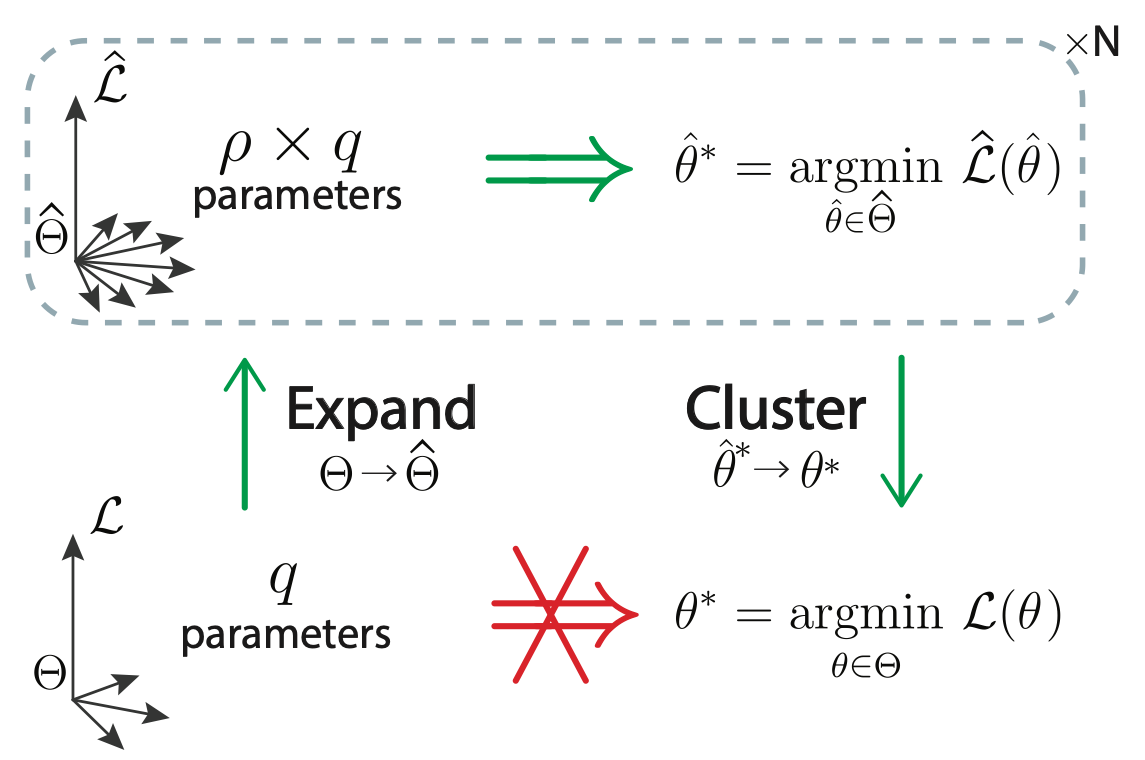

| Nov 25, 2025 | PAPER 📝 Our extension of Expand-and-Cluster for overparameterized networks have been accepted at the NeurIPS UniReps workshop. We come up with ways to augment data specifically to extract informative signals from black-box networks. Check out Data Augmentation Techniques to Reverse-Engineer Neural Network Weights from Input-Output Queries. |

| Oct 01, 2025 | POSTER 🏞️ In Frankfurt for two posters on RNN solution degeneracy and Toy models of identifiability for neuroscience at the Bernstein conference. |

| Sep 15, 2025 | PAPER 📝 Measuring and Controlling Solution Degeneracy across Task-Trained Recurrent Neural Networks has been accepted to NeurIPS as spotlight! |

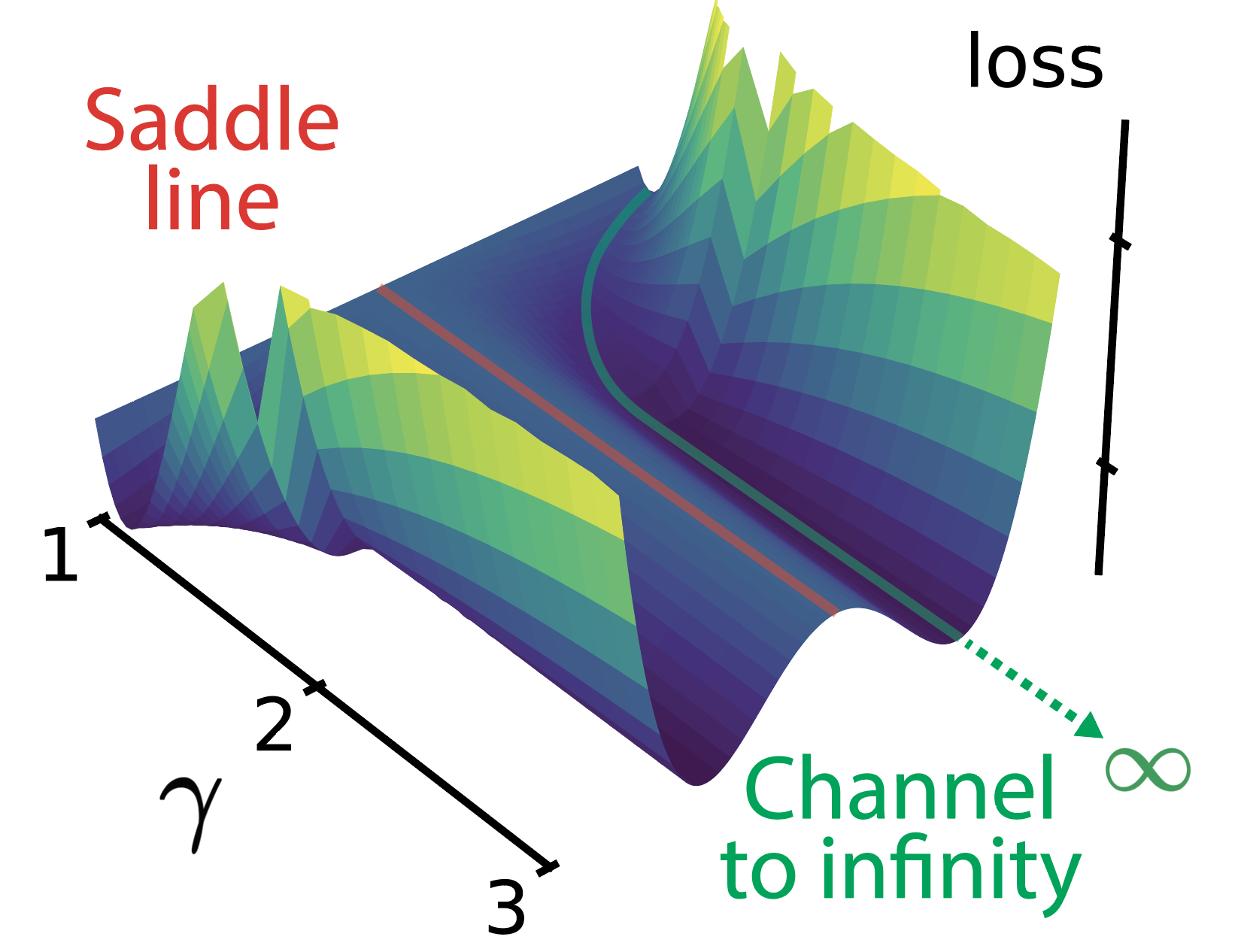

| Sep 15, 2025 | PAPER 📝 Flat Channels to Infinity in Neural Loss Landscapes has been accepted to NeurIPS for a poster! |

| Sep 03, 2025 | TALK 🎤 Gave a talk about the channels to infinity in loss landscapes in the Ploutos platform - link to video |

| Aug 03, 2025 | 🌎 Currently in Woods Hole, MA (US) for the MIT Brain, Minds and Machines summer school. |